Btune: Intelligent Data Compression

Effective data compression is not a one-size-fits-all problem. The optimal combination of compression parameters depends on data type (e.g., numeric, text), structure, use case, acquisition context, and other characteristics. A poor choice can severely degrade performance or even render an application unusable. Furthermore, due to effects such as concept drift, the optimal parameters may evolve over time.

Exhaustively exploring the parameter space is both time-consuming and operationally expensive. There is a clear need to automate this process and to define objective criteria for what “best” means in a given context. Btune is ironArray’s answer to this challenge.
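The sketch below shows why brute force gets expensive and why "best" has to be defined explicitly. It tries every combination in a small parameter space and keeps the winner under a pluggable objective. It uses Python's standard-library codecs (`zlib`, `bz2`, `lzma`) as stand-ins for Blosc2's codecs. The names `exhaustive_search` and `ratio_only` are hypothetical, and the code does not describe how Btune works internally.

```python
import bz2
import itertools
import lzma
import time
import zlib

# Stand-in parameter space built from Python's standard library (an assumption for
# illustration; Blosc2's real space also includes other codecs, filters, block sizes...).
CODECS = {
    "zlib": lambda data, level: zlib.compress(data, level),
    "bz2": lambda data, level: bz2.compress(data, level),
    "lzma": lambda data, level: lzma.compress(data, preset=level),
}
LEVELS = range(1, 7)  # 1..6 is valid for all three codecs and keeps lzma's memory use modest


def ratio_only(ratio: float, seconds: float) -> float:
    """Example objective: 'best' simply means the highest compression ratio."""
    return ratio


def exhaustive_search(data: bytes, objective=ratio_only):
    """Compress `data` with every (codec, level) pair and return the best by `objective`."""
    results = []
    for name, level in itertools.product(CODECS, LEVELS):
        start = time.perf_counter()
        packed = CODECS[name](data, level)
        seconds = time.perf_counter() - start
        ratio = len(data) / len(packed)
        results.append((objective(ratio, seconds), name, level, ratio, seconds))
    return max(results), len(results)


if __name__ == "__main__":
    # Synthetic sample: consecutive 32-bit little-endian integers.
    sample = b"".join(i.to_bytes(4, "little") for i in range(50_000))
    (score, name, level, ratio, seconds), tried = exhaustive_search(sample)
    print(f"tried {tried} combinations; best: {name} level {level}, ratio {ratio:.1f}")
```

Every additional parameter, such as a filter, a block size, or a thread count, multiplies the number of combinations. Each one must be compressed and timed, so this approach quickly becomes impractical for large, changing datasets.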

What Is Btune?

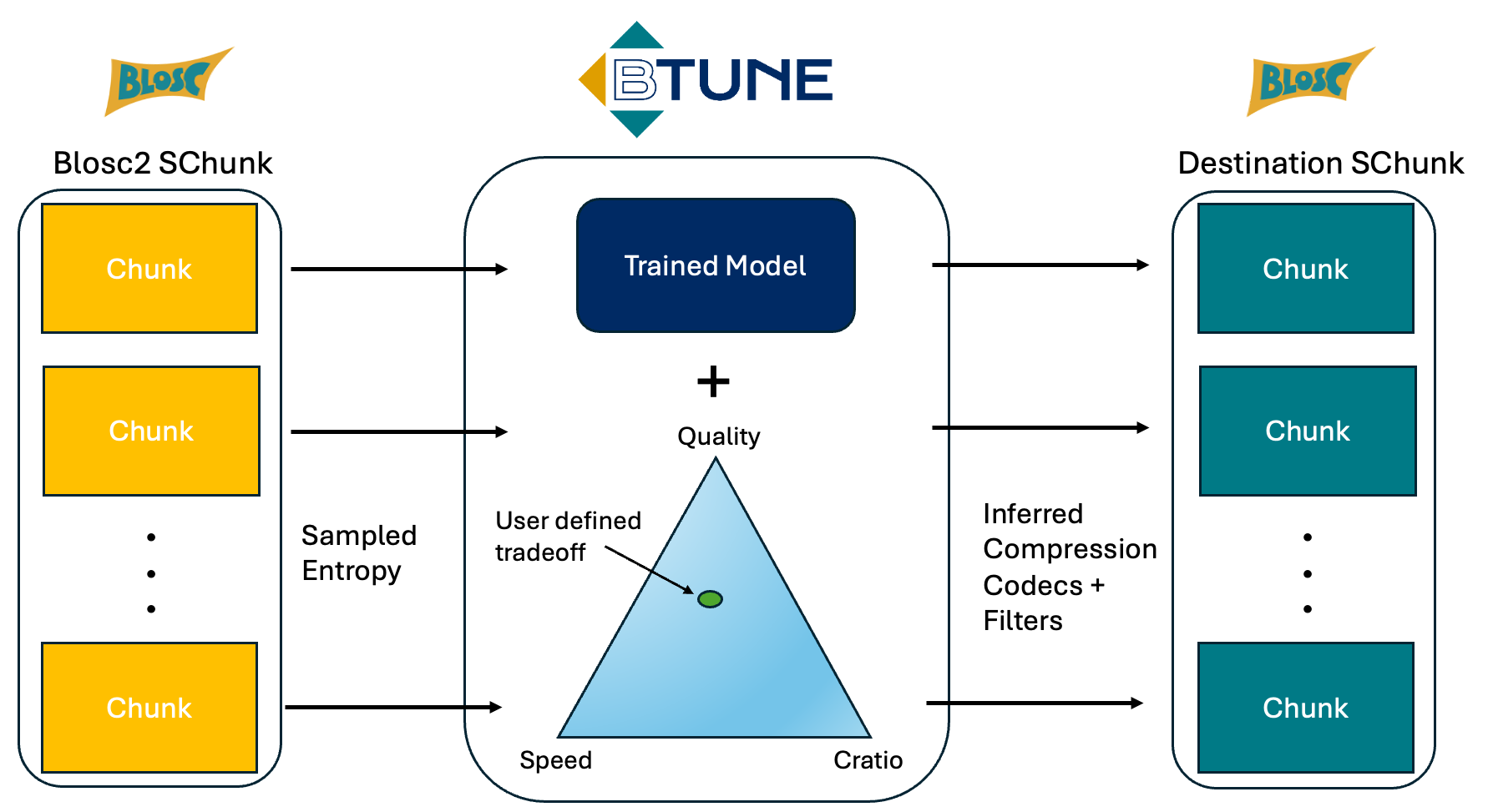

Btune is a Blosc2 plugin that uses machine- and deep-learning techniques to determine the optimal compression parameters for your datasets. We offer three tiers to address a range of use cases:

-

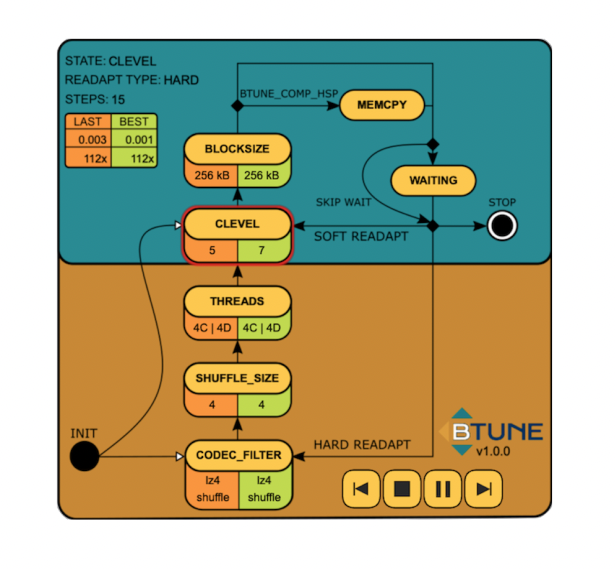

Btune Community: A free edition for personal use. It employs a genetic algorithm to efficiently explore parameter combinations and identify high‑quality settings for your dataset. For a graphical visualization, click the image on the right.

-

Btune Models: A commercial license for teams that work with relatively homogeneous data. Using your sample data, we train and deploy a custom neural network model optimized for your workload, providing fast and accurate parameter recommendations.

-

Btune Studio: A full commercial solution that includes our training software, giving you complete control to create, train, and deploy your own models on‑site for an unlimited variety of datasets.
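A toy search can illustrate the genetic-algorithm idea behind Btune Community. This is a minimal sketch, not Btune's implementation. The genome (codec, level, byte-shuffle on/off), the standard-library codecs, and the choice of tournament selection, uniform crossover, and single-gene mutation are all assumptions made for brevity. The names `genetic_search`, `byte_shuffle`, and `compress` are hypothetical.

```python
import bz2
import lzma
import random
import zlib

# Hypothetical genome: (codec, level, shuffle). Standard-library codecs stand in
# for Blosc2's; this is not Btune Community's actual search space or code.
CODECS = ("zlib", "bz2", "lzma")
LEVELS = tuple(range(1, 7))  # 1..6 is valid for all three codecs
SHUFFLE = (False, True)
GENES = (CODECS, LEVELS, SHUFFLE)
TYPESIZE = 4  # assumed item size in bytes for the shuffle filter


def byte_shuffle(data: bytes, typesize: int = TYPESIZE) -> bytes:
    """Group byte k of every item together (a simple byte-shuffle filter)."""
    return b"".join(data[k::typesize] for k in range(typesize))


def compress(data: bytes, genome) -> bytes:
    """Apply the optional shuffle, then the codec/level encoded in the genome."""
    codec, level, shuffle = genome
    if shuffle:
        data = byte_shuffle(data)
    if codec == "zlib":
        return zlib.compress(data, level)
    if codec == "bz2":
        return bz2.compress(data, level)
    return lzma.compress(data, preset=level)


def genetic_search(data, pop_size=8, generations=6, mutation_rate=0.3, seed=0):
    rng = random.Random(seed)
    cache = {}  # genome -> fitness, so repeated genomes are not recompressed

    def fitness(genome):
        # Objective: compression ratio (higher is better).
        if genome not in cache:
            cache[genome] = len(data) / len(compress(data, genome))
        return cache[genome]

    def tournament(population):
        # Pick two individuals at random and keep the fitter one.
        a, b = rng.sample(population, 2)
        return a if fitness(a) >= fitness(b) else b

    population = [tuple(rng.choice(g) for g in GENES) for _ in range(pop_size)]
    for _ in range(generations):
        next_gen = [max(population, key=fitness)]  # elitism: always keep the best
        while len(next_gen) < pop_size:
            p1, p2 = tournament(population), tournament(population)
            # Uniform crossover: each gene is taken from one parent at random.
            child = tuple(rng.choice(pair) for pair in zip(p1, p2))
            if rng.random() < mutation_rate:
                # Mutation: re-sample one randomly chosen gene.
                i = rng.randrange(len(GENES))
                child = child[:i] + (rng.choice(GENES[i]),) + child[i + 1:]
            next_gen.append(child)
        population = next_gen
    best = max(population, key=fitness)
    return best, fitness(best), len(cache)


if __name__ == "__main__":
    sample = b"".join(i.to_bytes(TYPESIZE, "little") for i in range(20_000))
    best, ratio, evaluated = genetic_search(sample)
    total = len(CODECS) * len(LEVELS) * len(SHUFFLE)
    print(f"best {best}, ratio {ratio:.1f}; evaluated {evaluated} of {total} genomes")
```

Fitness values are cached, so `evaluated` counts how many distinct combinations were actually compressed. That number can be smaller than the full space of 36.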

Why Btune?

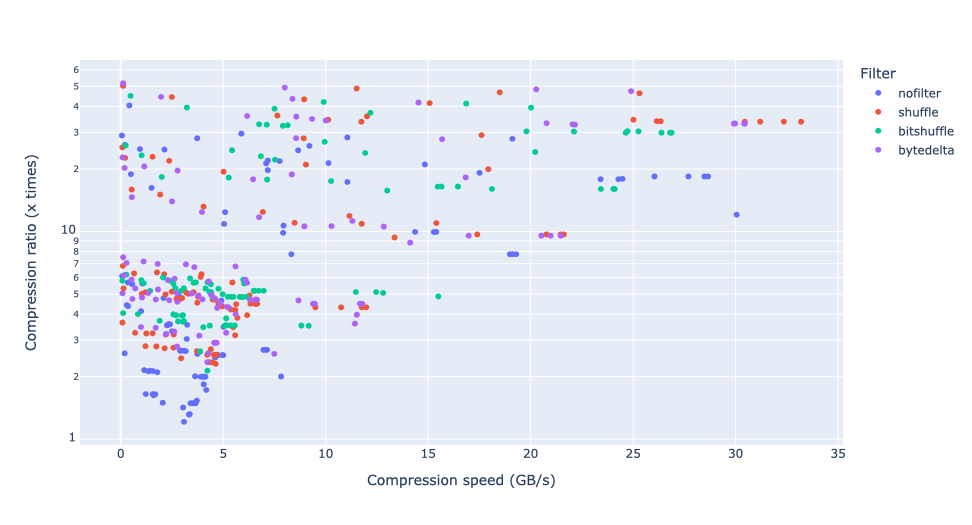

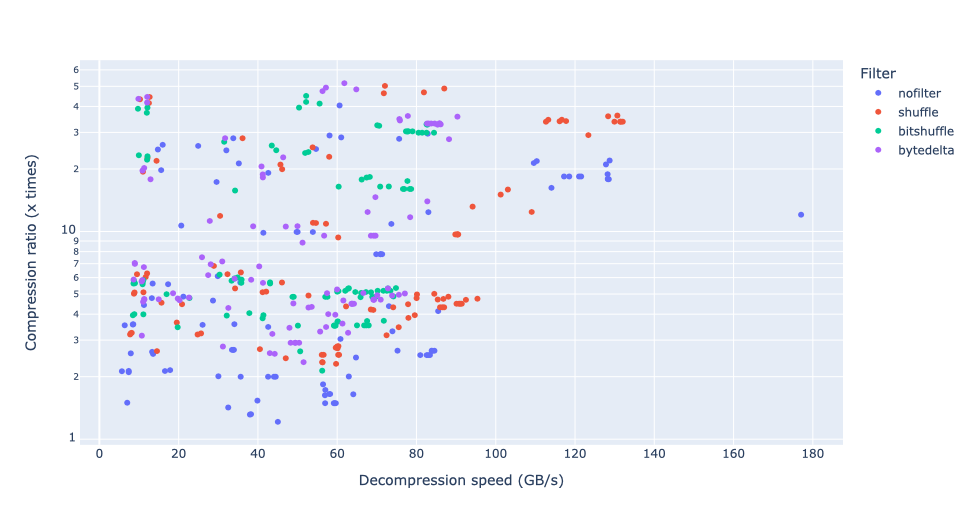

The primary trade-off in data compression is between compression ratio and speed. For example, high‑throughput data acquisition often prioritizes fast compression, while frequently accessed datasets benefit more from rapid decompression. Btune enables you to systematically optimize for the metrics that matter most to your application.
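Both sides of this trade-off can be measured directly. The sketch below reports the compression ratio together with compression and decompression throughput for each codec. It assumes Python's standard-library codecs as stand-ins for Blosc2's, and the `measure` helper is hypothetical.

```python
import bz2
import lzma
import time
import zlib

# Standard-library codecs used as stand-ins for Blosc2 codecs (an assumption
# for illustration); each entry is a (compress, decompress) pair.
CODECS = {
    "zlib-1": (lambda d: zlib.compress(d, 1), zlib.decompress),
    "zlib-9": (lambda d: zlib.compress(d, 9), zlib.decompress),
    "bz2-9": (lambda d: bz2.compress(d, 9), bz2.decompress),
    "lzma-6": (lambda d: lzma.compress(d, preset=6), lzma.decompress),
}


def measure(data: bytes, compress, decompress):
    """Return (compression ratio, compression MB/s, decompression MB/s)."""
    t0 = time.perf_counter()
    packed = compress(data)
    t1 = time.perf_counter()
    unpacked = decompress(packed)
    t2 = time.perf_counter()
    if unpacked != data:
        raise ValueError("round trip failed")
    mb = len(data) / 1e6
    # Guard against a zero interval on very coarse clocks.
    return len(data) / len(packed), mb / max(t1 - t0, 1e-9), mb / max(t2 - t1, 1e-9)


if __name__ == "__main__":
    sample = b"".join(i.to_bytes(4, "little") for i in range(200_000))
    for name, (comp, decomp) in CODECS.items():
        ratio, cspeed, dspeed = measure(sample, comp, decomp)
        print(f"{name:7s} ratio={ratio:6.1f}  comp={cspeed:8.1f} MB/s  decomp={dspeed:8.1f} MB/s")
```

Absolute numbers depend on the machine and the data. Run this kind of measurement on a sample of your own data rather than relying on any single published figure.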

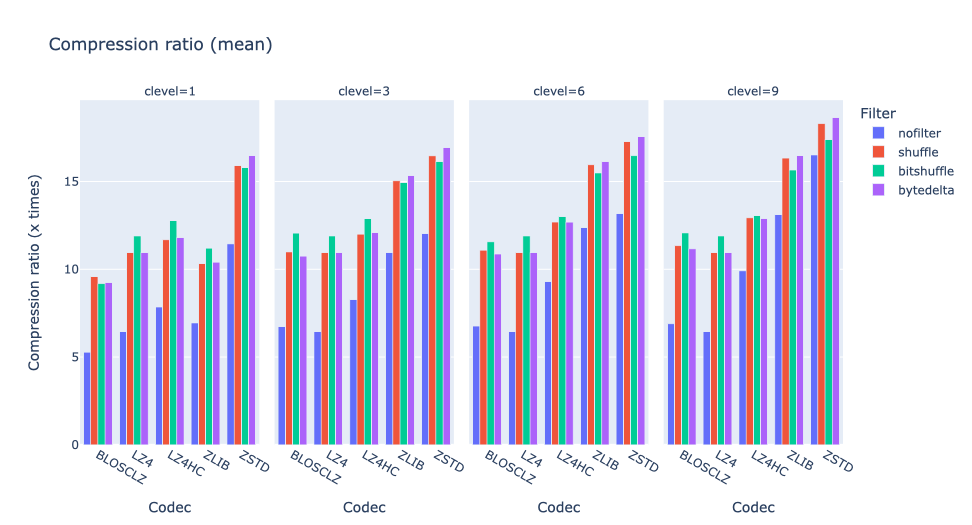

The accompanying figures illustrate these trade‑offs for different codecs and filters, using chunks of weather data; the second figure compares the same codecs and filters in terms of compression ratio.

With Btune, you can identify the Pareto-optimal combinations of compression parameters for your datasets. These are combinations for which neither compression ratio nor speed can be improved without worsening the other. You can then choose the one that best balances the two for your specific requirements.
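Pareto optimality can be expressed as a simple filter: a candidate is kept only if no other candidate is at least as good on both metrics and strictly better on one. The following is a minimal sketch with hypothetical names (`Candidate`, `dominates`, `pareto_front`). The numbers are made up and are not benchmark results.

```python
from typing import NamedTuple


class Candidate(NamedTuple):
    name: str
    ratio: float  # compression ratio, higher is better
    speed: float  # e.g. decompression speed in GB/s, higher is better


def dominates(a: Candidate, b: Candidate) -> bool:
    """a dominates b if it is no worse on both metrics and strictly better on one."""
    return (a.ratio >= b.ratio and a.speed >= b.speed
            and (a.ratio > b.ratio or a.speed > b.speed))


def pareto_front(cands):
    """Keep only the candidates that no other candidate dominates."""
    return [c for c in cands if not any(dominates(o, c) for o in cands)]


if __name__ == "__main__":
    # Hypothetical values for illustration only, not measurements.
    cands = [
        Candidate("A", ratio=2.0, speed=8.0),
        Candidate("B", ratio=3.5, speed=5.0),
        Candidate("C", ratio=3.0, speed=4.0),  # dominated by B
        Candidate("D", ratio=5.0, speed=1.0),
    ]
    print([c.name for c in pareto_front(cands)])  # → ['A', 'B', 'D']
```

The front itself does not pick a winner. Choosing among A, B, and D still requires stating how much ratio you are willing to trade for speed.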

How To Use

Ready to optimize your compression? Getting started with Btune is straightforward. Install the plugin directly from PyPI:

```shell
pip install blosc2-btune
```

This single plugin supports both Btune Community and Btune Models. For detailed instructions, refer to the Btune README, or contact us. To use Btune Studio, additional software for on‑site model training is required; please contact us for setup.

The Btune plugin is currently available for Linux and macOS on Intel architectures, with support for additional platforms under development.

Explore our hands-on tutorials to see Btune in action:

Note: To complete the Studio tutorial, you will need to contact us to obtain the additional software required for model training.

What's in a Model?

Neural networks are highly effective parametric models for learning from complex data. During training, the network is repeatedly presented with paired inputs and outputs (e.g., data features, compression parameters, and resulting compression ratios) and automatically adjusts its internal parameters to improve predictive accuracy. Once trained, it can rapidly generate predictions for new, unseen data.
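A deliberately tiny parametric model can illustrate this training loop. The sketch is a stand-in, not Btune's neural network. It trains a single linear unit by gradient descent to predict the zlib compressed-size fraction of a chunk from one feature, the chunk's byte entropy. The training pairs come from compressing synthetic random chunks, and the names `byte_entropy`, `make_dataset`, and `train` are hypothetical.

```python
import math
import random
import zlib
from collections import Counter


def byte_entropy(chunk: bytes) -> float:
    """Shannon entropy in bits per byte (0 for constant data, at most 8)."""
    n = len(chunk)
    return -sum(c / n * math.log2(c / n) for c in Counter(chunk).values())


def make_dataset(n_chunks=40, chunk_size=2048, seed=0):
    """Training pairs: (entropy / 8, zlib compressed-size fraction) per chunk."""
    rng = random.Random(seed)
    xs, ys = [], []
    for _ in range(n_chunks):
        alphabet = rng.randint(2, 256)  # fewer distinct symbols means lower entropy
        chunk = bytes(rng.randrange(alphabet) for _ in range(chunk_size))
        xs.append(byte_entropy(chunk) / 8)  # feature normalized to [0, 1]
        ys.append(len(zlib.compress(chunk, 6)) / len(chunk))
    return xs, ys


def train(xs, ys, lr=0.3, epochs=3000):
    """Fit y ≈ w * x + b by full-batch gradient descent on the mean squared error."""
    w = b = 0.0
    n = len(xs)
    for _ in range(epochs):
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        grad_w = 2 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2 / n * sum(errors)
        w -= lr * grad_w  # step against the gradient to reduce the error
        b -= lr * grad_b
    return w, b


if __name__ == "__main__":
    xs, ys = make_dataset()
    w, b = train(xs, ys)
    print(f"predicted compressed fraction = {w:.3f} * entropy/8 + {b:.3f}")
```

A real model uses a nonlinear network with many more features and outputs. The principle is the same: compare predictions with observed outputs and adjust the parameters to reduce the error.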

Btune packages the result of this process into a “model,” stored as a small set of files (in JSON and TensorFlow format). You can place these files anywhere on your system for Btune to use. Btune uses the model to instantly predict the best compression parameters for each chunk of your data, based on its characteristics. This real‑time prediction capability makes Btune particularly well‑suited for optimizing compression in high‑volume data streams.
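Per-chunk prediction can be sketched as follows. Btune's real models are neural networks stored as JSON and TensorFlow files, and this sketch does not load them. Instead, a hypothetical rule-based `predict_params` function stands in for the model. It picks a standard-library codec and level from each chunk's byte entropy, using arbitrary thresholds. The helpers `compress_chunks` and `decompress_chunks` are also hypothetical.

```python
import lzma
import math
import os
import zlib
from collections import Counter


def byte_entropy(chunk: bytes) -> float:
    """Shannon entropy in bits per byte (0 for constant data, at most 8)."""
    n = len(chunk)
    return -sum(c / n * math.log2(c / n) for c in Counter(chunk).values())


def predict_params(chunk: bytes):
    """Hypothetical stand-in for a trained model: map chunk features to params.

    A real Btune model makes this prediction with a neural network; this toy
    rule only looks at byte entropy, and its thresholds are arbitrary.
    """
    h = byte_entropy(chunk)
    if h > 7.5:
        return ("zlib", 1)  # near-random bytes: compress cheaply
    if h > 4.0:
        return ("zlib", 6)
    return ("lzma", 6)  # highly redundant bytes: spend effort on ratio


def compress_chunks(chunks):
    """Compress each chunk with the parameters predicted for that chunk."""
    frames = []
    for chunk in chunks:
        codec, level = predict_params(chunk)
        if codec == "zlib":
            packed = zlib.compress(chunk, level)
        else:
            packed = lzma.compress(chunk, preset=level)
        frames.append((codec, level, packed))  # record the choice with the data
    return frames


def decompress_chunks(frames):
    """Decode each frame with the codec recorded alongside it."""
    return [zlib.decompress(p) if codec == "zlib" else lzma.decompress(p)
            for codec, _level, p in frames]


if __name__ == "__main__":
    chunks = [b"compression " * 5000, bytes(range(32)) * 100, os.urandom(60_000)]
    for codec, level, packed in compress_chunks(chunks):
        print(codec, level, len(packed))
```

Each frame records its own codec choice. A stream whose content changes over time can therefore be compressed with different parameters from chunk to chunk and still be decoded.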

A Starry Example

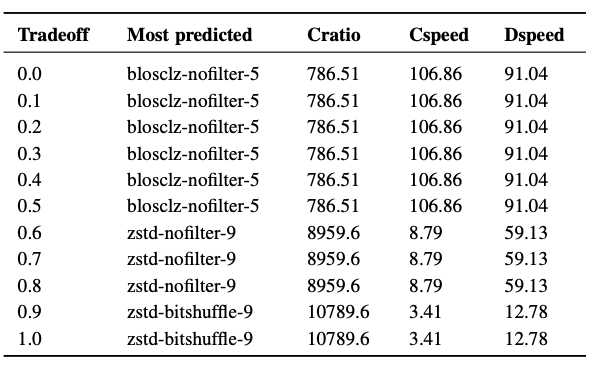

The figures below illustrate Btune’s optimization for decompression speed on a 7.3 TB subset of the Gaia dataset. The first image shows the predicted optimal codec and filter combinations for this objective, as a function of the desired trade‑off between decompression speed and compression ratio.
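One simple way to express a desired trade-off is a weight between 0 and 1. The weight blends normalized decompression speed and compression ratio into a single score. The sketch below assumes such a linear scoring rule, and Btune's actual internals may differ. The function `pick` and the candidate names and numbers are hypothetical and are not Gaia results.

```python
from typing import NamedTuple


class Candidate(NamedTuple):
    name: str
    ratio: float         # compression ratio (higher is better)
    decomp_speed: float  # decompression speed, e.g. GB/s (higher is better)


def pick(cands, tradeoff: float) -> Candidate:
    """tradeoff=0 favours decompression speed only; tradeoff=1 favours ratio only."""
    if not 0.0 <= tradeoff <= 1.0:
        raise ValueError("tradeoff must be in [0, 1]")
    max_r = max(c.ratio for c in cands)
    max_s = max(c.decomp_speed for c in cands)

    def score(c):
        # Normalize each metric by the best observed value, then blend linearly.
        return tradeoff * c.ratio / max_r + (1 - tradeoff) * c.decomp_speed / max_s

    return max(cands, key=score)


if __name__ == "__main__":
    # Hypothetical candidates for illustration only, not measured results.
    cands = [
        Candidate("fast", 2.0, 10.0),
        Candidate("balanced", 4.0, 7.0),
        Candidate("dense", 6.0, 2.0),
    ]
    for t in (0.0, 0.5, 1.0):
        print(t, pick(cands, t).name)  # prints fast, balanced, dense in turn
```

Sweeping the weight from 0 to 1 moves the choice from the fastest candidate toward the densest one.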

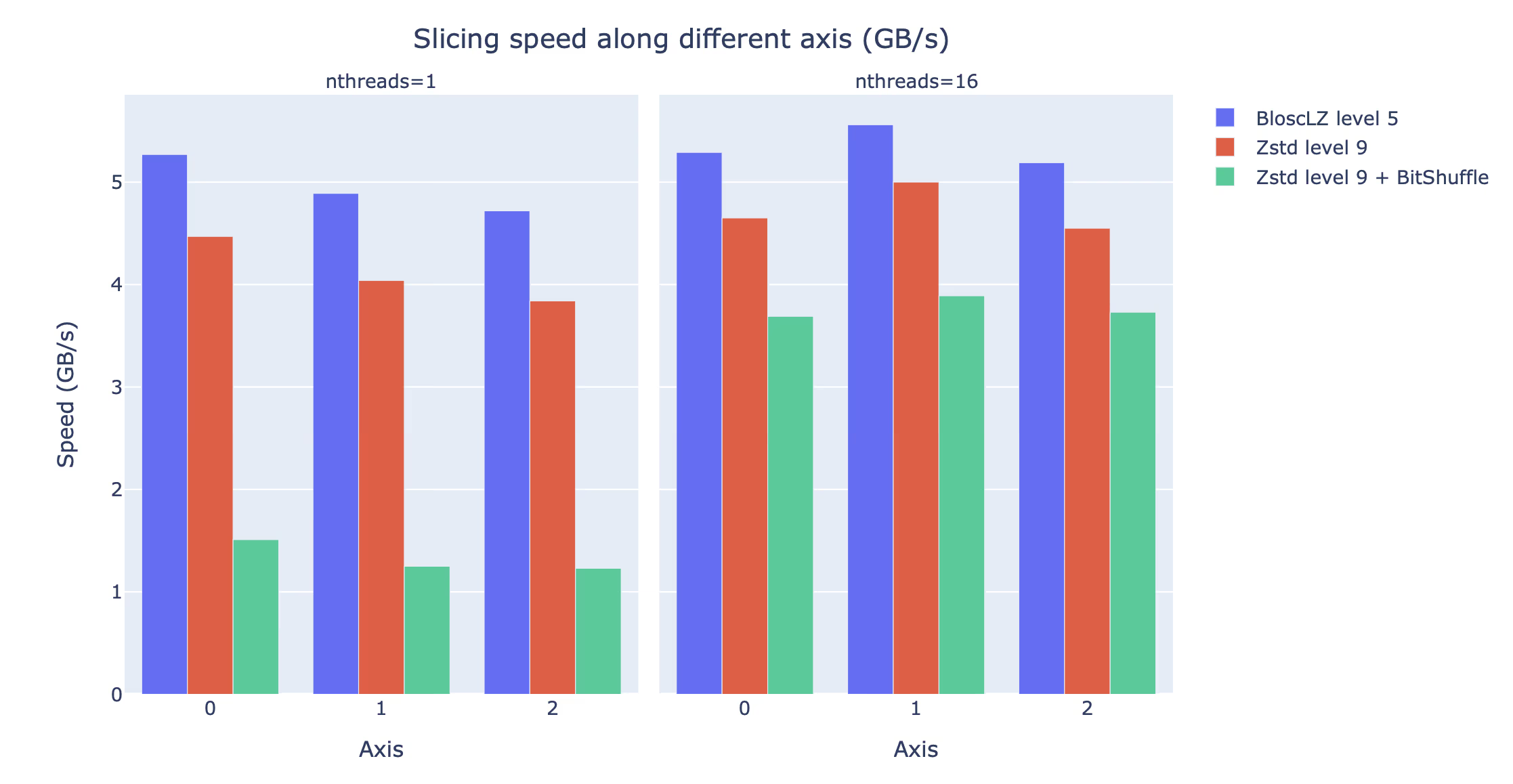

The following image plots the I/O speed (in GB/s; higher is better) achieved with these combinations when reading multiple multidimensional slices of the Gaia dataset along different axes.

The results show that the codec/compression level combinations BloscLZ (level 5) and Zstd (level 9) are fastest. Since their performance is not heavily dependent on the number of threads, they perform well even on machines with fewer CPU cores.

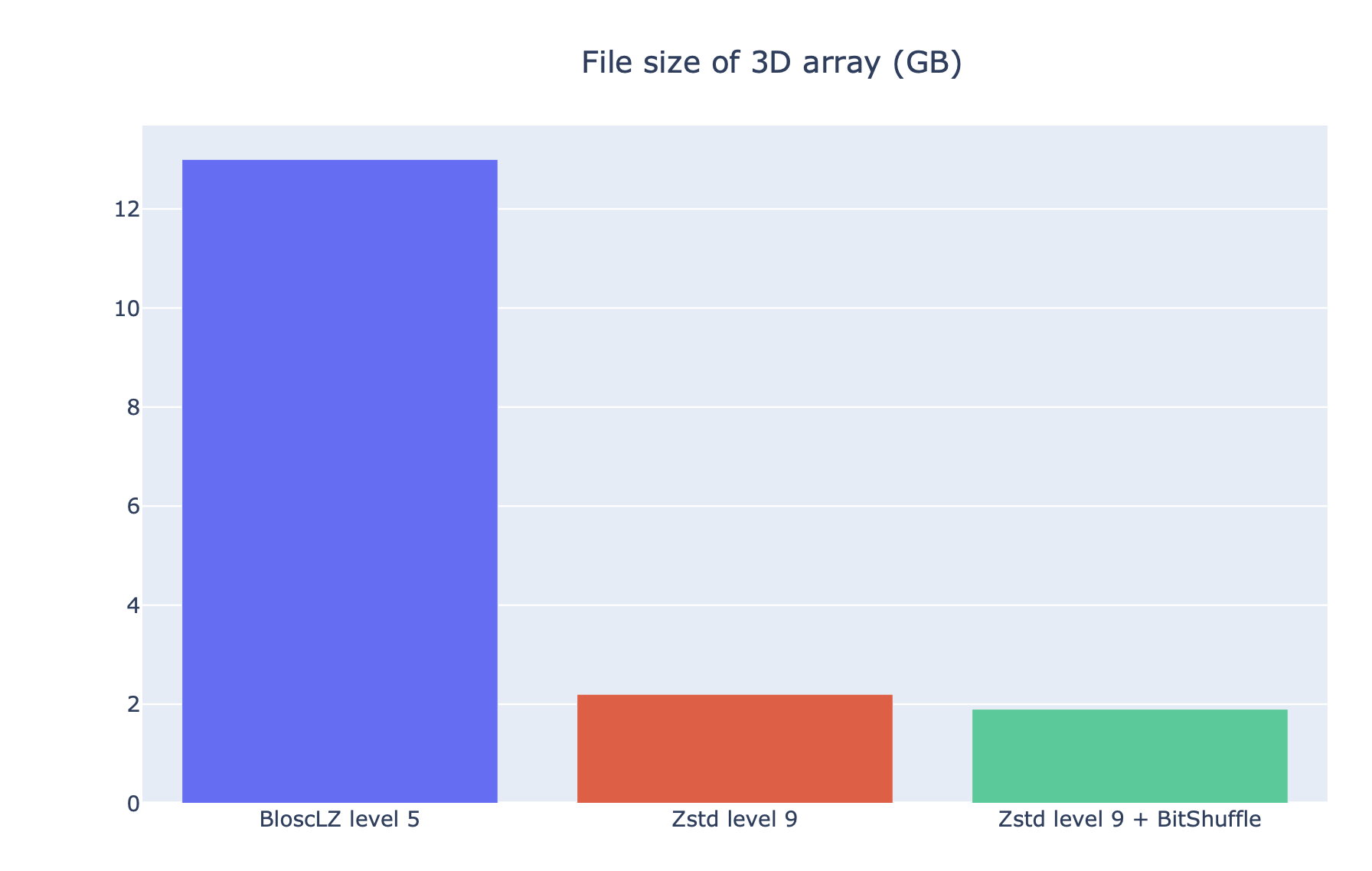

Finally, the last figure compares the resulting compressed file sizes (in GB); lower values are better.

In this case, the trained model recommends Zstd (level 9) as a strong compromise between file size and decompression speed. While adding the BitShuffle filter yields the highest compression ratio, it is not generally recommended due to its impact on overall performance.

For more details, see our SciPy 2023 paper and the accompanying slides. The data and scripts are also available on GitHub.

Pricing

Visit our pricing page for detailed information on the available Btune licensing options.

Testimonials

Blosc2 and Btune are fantastic tools that allow us to efficiently compress and load large volumes of data for the development of AI algorithms for clinical applications. In particular, the new NDarray structure became immensely useful when dealing with large spectral video sequences.

-- Leonardo Ayala, Div. Intelligent Medical Systems, German Cancer Research Center (DKFZ)

Btune is a simple and highly effective tool. We tried this out with @LEAPSinitiative data and found some super useful spots in the parameter space of Blosc2 compression arguments! Awesome work, @Blosc and @ironArray teams!

-- Peter Steinbach, Helmholtz AI Consultants Team Lead for Matter Research @HZDR_Dresden

Contact

If you are interested in Btune or have any questions, please contact us at contact@ironarray.io.