Python-Blosc2 4.0: Unleashing Compute Speed with miniexpr

We are thrilled to announce the immediate availability of Python-Blosc2 4.0. This major release is a significant architectural leap forward. By adding support for miniexpr, we have given new powers to the internal compute engine, which can now evaluate expressions on blocks (the small, cache-friendly partitions inside each chunk) rather than on whole chunks.

The result? Python-Blosc2 is no longer just a fast storage library. It is a compute powerhouse that can outperform specialized in-memory engines like NumPy and even NumExpr, all while handling compressed data.
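Working at block granularity matters mostly because of intermediate results. The sketch below is plain Python. It is not Blosc2's API and not how miniexpr is implemented, and `eval_whole` and `eval_blocked` are hypothetical helpers. `eval_whole` evaluates `a*b + c` one operation at a time over the full operands, so its temporary is as large as the input. `eval_blocked` fuses the expression and processes one block at a time, so no temporary ever exceeds the block size. The block size of 1024 is arbitrary here. A real engine would pick it so that the working set stays in CPU cache.

```python
# Conceptual sketch with hypothetical helpers: compare the largest intermediate
# buffer needed to evaluate a*b + c over whole operands vs. block by block.
# This is NOT Blosc2 or miniexpr code.

def eval_whole(a, b, c):
    """Evaluate a*b + c one operation at a time over the full operands."""
    tmp = [x * y for x, y in zip(a, b)]          # full-length temporary
    out = [t + z for t, z in zip(tmp, c)]
    return out, len(tmp)


def eval_blocked(a, b, c, blocksize):
    """Evaluate a*b + c per block; temporaries hold at most `blocksize` items."""
    out, largest_tmp = [], 0
    for start in range(0, len(a), blocksize):
        stop = start + blocksize                 # slicing past the end is safe
        tmp = [x * y for x, y in zip(a[start:stop], b[start:stop])]
        largest_tmp = max(largest_tmp, len(tmp))
        out.extend(t + z for t, z in zip(tmp, c[start:stop]))
    return out, largest_tmp


n = 100_000
a = list(range(n))
b = [2] * n
c = [1] * n

whole, whole_tmp = eval_whole(a, b, c)
blocked, block_tmp = eval_blocked(a, b, c, blocksize=1024)
assert whole == blocked                          # same result either way
print(whole_tmp, block_tmp)                      # 100000 1024
```

Both paths compute identical values. The only difference is how much temporary data each one has to move through the memory hierarchy.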

Beating the Memory Wall (Again)

In our previous post, "The Surprising Speed of Compressed Data: A Roofline Story", we showed how Blosc2 outruns the competition for out-of-core workloads. For in-memory, low-intensity computations, however, it often lagged behind NumExpr. Our faith in Blosc2's compression-first paradigm, which is optimized for cache hierarchies, motivated the development of miniexpr. It is a purpose-built, thread-safe evaluator that supports vectorization, uses SIMD acceleration for common math functions, and follows NumPy conventions for type inference and promotion. We didn't just optimize existing code; we built a new engine from scratch to exploit modern CPU caches.
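The snippet below illustrates the general shape of a "parse once, evaluate per block" engine. It is a hypothetical toy in pure Python. It is not miniexpr's design or API, and it omits SIMD, vectorization and NumPy-style type promotion entirely. `compile_expr` turns an expression string into a tree of immutable closures. Because the compiled expression holds no mutable state, `evaluate_blockwise` (also hypothetical) can hand different blocks to different threads that share it. In standard CPython the GIL limits any real speedup. The point here is only that sharing the compiled expression is safe.

```python
import ast
import math
import operator
from concurrent.futures import ThreadPoolExecutor

# Hypothetical toy engine: parse an expression once, then evaluate it block by
# block. It mimics the general idea only; it is NOT miniexpr's implementation.
_BINOPS = {ast.Add: operator.add, ast.Sub: operator.sub,
           ast.Mult: operator.mul, ast.Div: operator.truediv}
_FUNCS = {"sin": math.sin, "cos": math.cos, "exp": math.exp, "sqrt": math.sqrt}


def compile_expr(expr):
    """Parse `expr` once into immutable closures mapping (block, n) -> list."""

    def build(node):
        if isinstance(node, ast.BinOp) and type(node.op) in _BINOPS:
            op, lhs, rhs = _BINOPS[type(node.op)], build(node.left), build(node.right)
            return lambda blk, n: [op(x, y) for x, y in zip(lhs(blk, n), rhs(blk, n))]
        if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
            inner = build(node.operand)
            return lambda blk, n: [-x for x in inner(blk, n)]
        if (isinstance(node, ast.Call) and isinstance(node.func, ast.Name)
                and node.func.id in _FUNCS and len(node.args) == 1):
            fn, arg = _FUNCS[node.func.id], build(node.args[0])
            return lambda blk, n: [fn(x) for x in arg(blk, n)]
        if isinstance(node, ast.Name):
            name = node.id
            return lambda blk, n: blk[name]      # operand slice for this block
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            value = node.value
            return lambda blk, n: [value] * n    # broadcast scalar to block length
        raise ValueError(f"unsupported syntax: {ast.dump(node)}")

    return build(ast.parse(expr, mode="eval").body)


def evaluate_blockwise(fn, operands, blocksize=4, nthreads=2):
    """Evaluate a compiled expression over equally long operands, block by block."""
    n = len(next(iter(operands.values())))

    def run(start):
        stop = min(start + blocksize, n)
        block = {name: values[start:stop] for name, values in operands.items()}
        return fn(block, stop - start)

    out = []
    # The compiled closures hold no mutable state, so threads can share them.
    with ThreadPoolExecutor(max_workers=nthreads) as pool:
        for part in pool.map(run, range(0, n, blocksize)):  # map() keeps order
            out.extend(part)
    return out


f = compile_expr("a * b + 1")
print(evaluate_blockwise(f, {"a": [1, 2, 3, 4, 5], "b": [10, 20, 30, 40, 50]}, blocksize=2))
# [11, 41, 91, 161, 251]
```

Parsing happens once per expression, not once per block. That is what makes a per-block evaluation loop cheap enough to run over many small blocks.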

As a result, Python-Blosc2 4.0 improves greatly on earlier Blosc2 versions for memory-bound workloads:

- The new miniexpr path dramatically improves in-memory performance for low-intensity kernels.

- The biggest gains come in the very-low- and low-intensity kernels, where cache traffic dominates.

- High-intensity (compute-bound) workloads remain essentially unchanged, as expected.

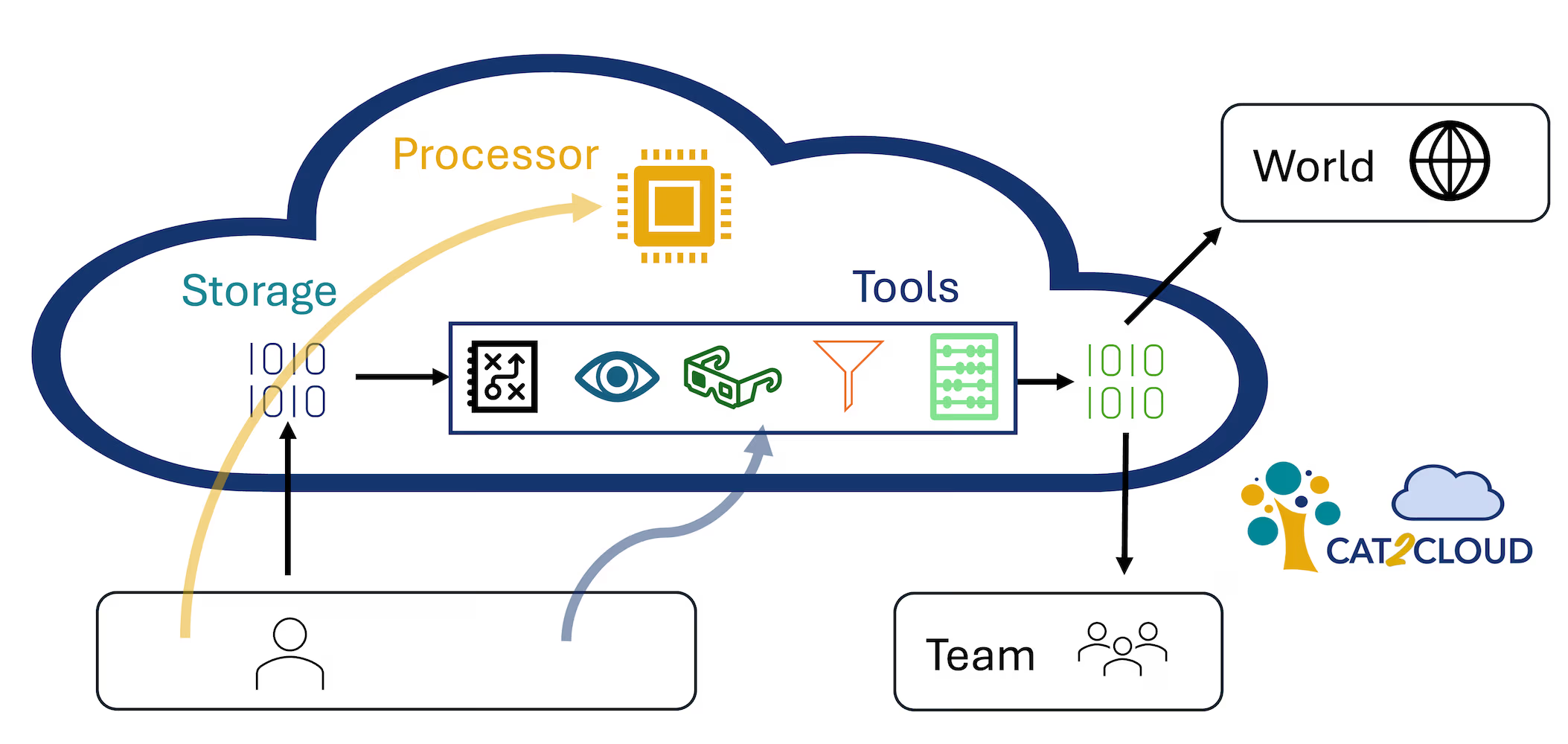

- Real-world applications like Cat2Cloud see immediate speedups (up to 4.5x) for data-intensive operations.
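The "very-low/low" and "high-intensity" labels refer to arithmetic intensity, the number of floating-point operations performed per byte moved, as in the roofline model from our previous post. Below is a minimal sketch of the roofline bound. The peak and bandwidth figures are hypothetical placeholders. They are not measurements of the machine behind the figure above. The `attainable_gflops` and `classify` helpers are illustrative names.

```python
# Roofline sketch: a kernel's attainable performance is the lower of the
# compute ceiling and (arithmetic intensity x memory bandwidth).
# Both hardware figures are hypothetical placeholders, not measurements.
PEAK_GFLOPS = 500.0    # hypothetical compute peak, GFLOP/s
BANDWIDTH_GBS = 50.0   # hypothetical memory bandwidth, GB/s


def attainable_gflops(intensity, peak=PEAK_GFLOPS, bandwidth=BANDWIDTH_GBS):
    """Roofline upper bound for a kernel doing `intensity` FLOPs per byte."""
    return min(peak, intensity * bandwidth)


def classify(intensity, peak=PEAK_GFLOPS, bandwidth=BANDWIDTH_GBS):
    """Memory-bound below the ridge point, compute-bound at or above it."""
    ridge = peak / bandwidth  # FLOPs/byte where the two ceilings meet
    return "memory-bound" if intensity < ridge else "compute-bound"


# a + b on float64: 1 FLOP per element, 24 bytes moved (two 8-byte loads,
# one 8-byte store). The other intensities are illustrative values only.
kernels = {"a + b": 1 / 24, "illustrative low": 0.5, "illustrative high": 50.0}
for name, ai in kernels.items():
    print(f"{name:18} {ai:7.3f} FLOP/B  {attainable_gflops(ai):6.1f} GFLOP/s  {classify(ai)}")
```

Keeping more of the working set in cache raises the effective bandwidth. That lifts only the slanted, memory-bound part of the roof, while the flat compute ceiling stays where it is. This is consistent with low-intensity kernels gaining the most while high-intensity workloads remain essentially unchanged.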

Keep reading to learn more about the results.